AKS - Nginx Ingress Controller Timeout

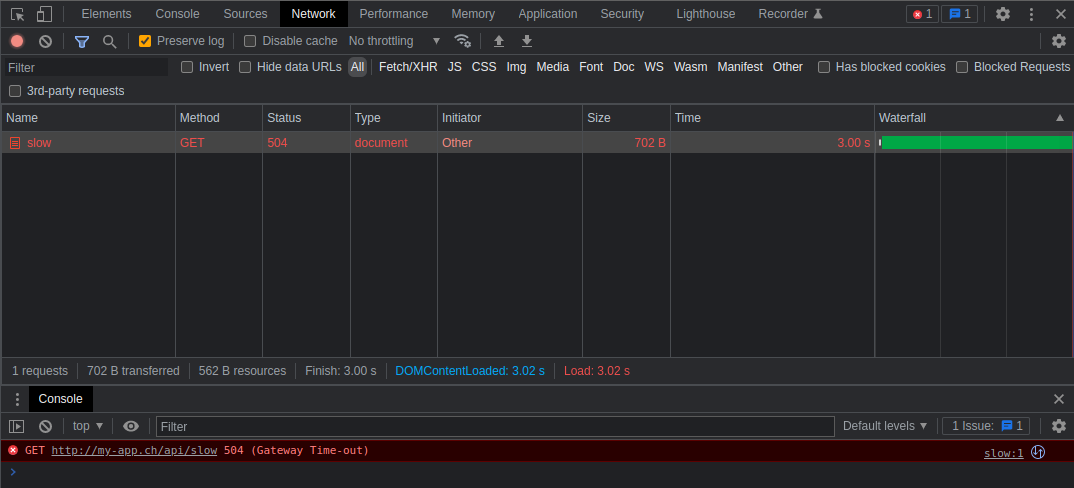

We run various applications in Kubernetes on Azure Kubernetes Service (AKS). Most of the time this works without any problems. Recently, however, one of them stopped working properly: an HTTP request triggered by an Angular application was always terminated with a 504 Gateway Timeout.

The application runs as a Kubernetes Service behind an NGINX Ingress Controller. To get to the root of the problem, it helps to take a closer look at the ingress controller logs with kubectl logs:

```shell
kubectl logs -n ingress-nginx ingress-nginx-controller-6d5f55986b-q9t7k -f
```

```text
2022/02/07 19:52:42 [error] 172#172: *3825 upstream timed out (110: Operation timed out) while reading response header from upstream, client: 192.168.49.1, server: my-app.ch, request: "GET /api/slow HTTP/1.1", upstream: "http://172.17.0.2:80/api/slow", host: "my-app.ch"
2022/02/07 19:52:43 [error] 172#172: *3825 upstream timed out (110: Operation timed out) while reading response header from upstream, client: 192.168.49.1, server: my-app.ch, request: "GET /api/slow HTTP/1.1", upstream: "http://172.17.0.2:80/api/slow", host: "my-app.ch"
2022/02/07 19:52:44 [error] 172#172: *3825 upstream timed out (110: Operation timed out) while reading response header from upstream, client: 192.168.49.1, server: my-app.ch, request: "GET /api/slow HTTP/1.1", upstream: "http://172.17.0.2:80/api/slow", host: "my-app.ch"
192.168.49.1 - - [07/Feb/2022:19:52:44 +0000] "GET /api/slow HTTP/1.1" 504 562 "-" "Mozilla/5.0 (X11; Fedora; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.80 Safari/537.36" 459 3.003 [myapp-myapp-80] [] 172.17.0.2:80, 172.17.0.2:80, 172.17.0.2:80 0, 0, 0 1.000, 1.002, 1.001 504, 504, 504 77e32c15c5339f90fb10c7f195f887e4
```

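The logs quickly show where the problem lies: the communication between the NGINX Ingress Controller and our application breaks down. The controller waits for the response header of the API call to http://172.17.0.2:80/api/slow, runs into a timeout ("upstream timed out ... while reading response header from upstream") and answers the client with a 504.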
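The access log line at the end carries more detail than the error lines. Assuming the controller still uses the default ingress-nginx `log-format-upstream` (the "upstreaminfo" format), the trailing fields are the request length, the total request time, the upstream name, and then one comma-separated entry per upstream attempt for address, response length, response time and status. The following Python sketch (a hypothetical helper, not part of ingress-nginx) pulls those fields apart:

```python
import re
from typing import Callable

# One or more comma-separated entries, e.g. "172.17.0.2:80, 172.17.0.2:80".
_LIST = r"[^\s,]+(?:, [^\s,]+)*"

# Assumption: the ingress-nginx default "upstreaminfo" log-format-upstream is in use.
LOG_RE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)" '
    r'(?P<request_length>\d+) (?P<request_time>[\d.]+) '
    r'\[(?P<upstream_name>[^\]]*)\] \[(?P<alt_upstream_name>[^\]]*)\] '
    r'(?P<upstream_addr>' + _LIST + r') '
    r'(?P<upstream_response_length>' + _LIST + r') '
    r'(?P<upstream_response_time>' + _LIST + r') '
    r'(?P<upstream_status>' + _LIST + r') '
    r'(?P<req_id>\S+)$'
)


def _entries(value: str, cast: Callable[[str], object]) -> list:
    """Split a per-attempt field; ingress-nginx logs '-' when a value is missing."""
    return [None if item == "-" else cast(item) for item in value.split(", ")]


def parse_access_line(line: str) -> dict:
    """Extract the status and the per-attempt upstream data from one access log line."""
    match = LOG_RE.match(line.strip())
    if match is None:
        raise ValueError("line does not match the assumed upstreaminfo format")
    f = match.groupdict()
    return {
        "request": f["request"],
        "status": int(f["status"]),
        "request_time": float(f["request_time"]),
        "upstream_name": f["upstream_name"],
        "upstream_addrs": f["upstream_addr"].split(", "),
        "upstream_times": _entries(f["upstream_response_time"], float),
        "upstream_statuses": _entries(f["upstream_status"], int),
    }


# The access log line from the controller output above.
SAMPLE_LINE = (
    '192.168.49.1 - - [07/Feb/2022:19:52:44 +0000] "GET /api/slow HTTP/1.1" 504 562 "-" '
    '"Mozilla/5.0 (X11; Fedora; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) '
    'Chrome/98.0.4758.80 Safari/537.36" 459 3.003 [myapp-myapp-80] [] '
    '172.17.0.2:80, 172.17.0.2:80, 172.17.0.2:80 0, 0, 0 1.000, 1.002, 1.001 '
    '504, 504, 504 77e32c15c5339f90fb10c7f195f887e4'
)

if __name__ == "__main__":
    info = parse_access_line(SAMPLE_LINE)
    print(len(info["upstream_addrs"]), "attempts:", info["upstream_times"], info["upstream_statuses"])
    # → 3 attempts: [1.0, 1.002, 1.001] [504, 504, 504]
```

For this line the sketch reports three attempts against the same pod, each ending in a 504, which lines up with the three "upstream timed out" error entries. Log formats that separate upstream groups with " : " are not handled here.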

The timeout configured in NGINX is easy to find: we only have to print the file /etc/nginx/nginx.conf from the controller pod.

```shell
kubectl exec -it -n ingress-nginx ingress-nginx-controller-6d5f55986b-dwnw8 -- cat /etc/nginx/nginx.conf
```

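NGINX offers several timeout settings. The relevant one for us is proxy_read_timeout, which ingress-nginx exposes as proxy-read-timeout. It was set to 60s, the default. This directive limits how long NGINX waits between two successive read operations on the upstream connection, including the wait for the response header: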

```nginx
proxy_read_timeout 60s;
```
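The generated nginx.conf is long and repeats the timeout directives for every location. As a sketch, assuming the file was first saved locally (for example with `kubectl exec -n ingress-nginx <pod> -- cat /etc/nginx/nginx.conf > nginx.conf`), the following Python counts each distinct proxy_connect_timeout, proxy_send_timeout and proxy_read_timeout value, so the effective settings and any per-location overrides are visible at a glance. The script name `nginx_timeouts.py` is hypothetical:

```python
import re
import sys
from collections import Counter

# Matches e.g. "    proxy_read_timeout                      60s;" at the start of a line.
TIMEOUT_RE = re.compile(
    r"^[ \t]*(proxy_(?:connect|send|read)_timeout)[ \t]+([^;]+);", re.MULTILINE
)


def summarize_timeouts(conf_text: str) -> Counter:
    """Count how often each (directive, value) pair appears in an nginx.conf dump."""
    return Counter(
        (m.group(1), m.group(2).strip()) for m in TIMEOUT_RE.finditer(conf_text)
    )


if __name__ == "__main__":
    # Hypothetical usage: python nginx_timeouts.py nginx.conf
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as conf:
            for (directive, value), count in summarize_timeouts(conf.read()).most_common():
                print(f"{directive} {value} (x{count})")
```

A plain `grep` returns the same lines; counting them simply makes a single location with a different value easier to spot.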

Unfortunately, a quick fix for the affected API was not possible, so the timeout of the ingress had to be increased. As a temporary workaround, we simply modified the Ingress YAML and set the annotation nginx.ingress.kubernetes.io/proxy-read-timeout to "180" (seconds):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  labels:
    app: myapp
  name: ingress-myapp
  namespace: myapp
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$1
    nginx.ingress.kubernetes.io/proxy-read-timeout: "180"
spec:
  ingressClassName: nginx
  rules:
    - host: my-app.ch
      http:
        paths:
          - path: /(.*)
            pathType: Prefix
            backend:
              service:
                name: myapp
                port:
                  number: 80
```
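Annotation values in Kubernetes are always strings, which is why "180" is quoted in the manifest; unquoted, YAML reads it as an integer and the manifest is rejected. Instead of editing and re-applying the whole file, the annotation can also be changed with `kubectl patch --type merge`. The Python sketch below (the helper name `timeout_patch` is hypothetical) builds such a JSON merge patch for the ingress-nginx timeout annotations, whose values are given in seconds:

```python
import json
from typing import Optional

# ingress-nginx annotation prefix; the three timeout annotations take seconds.
PREFIX = "nginx.ingress.kubernetes.io/"


def timeout_patch(
    read: Optional[int] = None,
    send: Optional[int] = None,
    connect: Optional[int] = None,
) -> dict:
    """Build a JSON merge patch that sets the ingress-nginx proxy timeout annotations."""
    wanted = {
        "proxy-read-timeout": read,
        "proxy-send-timeout": send,
        "proxy-connect-timeout": connect,
    }
    annotations = {}
    for name, seconds in wanted.items():
        if seconds is None:
            continue  # leave this annotation untouched
        if isinstance(seconds, bool) or not isinstance(seconds, int) or seconds <= 0:
            raise ValueError(f"{name} must be a positive number of seconds, got {seconds!r}")
        # Kubernetes annotation values are strings, so the number is converted here.
        annotations[PREFIX + name] = str(seconds)
    return {"metadata": {"annotations": annotations}}


if __name__ == "__main__":
    print(json.dumps(timeout_patch(read=180)))
    # → {"metadata": {"annotations": {"nginx.ingress.kubernetes.io/proxy-read-timeout": "180"}}}
```

Assuming the script is saved as `timeout_patch.py` (hypothetical), it could be applied with `kubectl patch ingress ingress-myapp -n myapp --type merge -p "$(python timeout_patch.py)"`; for a single value, `kubectl annotate ingress ingress-myapp -n myapp nginx.ingress.kubernetes.io/proxy-read-timeout="180" --overwrite` does the same. Either way, the next `kubectl apply` of the original YAML overwrites the change unless the file is updated too.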